In its IJCAI 2016 tutorial, Microsoft Research described how it applies deep learning and deep neural networks to different scenarios. The second part covered deep learning for statistical machine translation and dialogue. The third part covered continuous representations for selected natural language processing tasks. The fourth part covers natural language understanding and continuous word representations.

Joint Editor: Li Zun, Zhang Min, Chen Chun

Natural language understanding focuses on building intelligent systems that can interact with humans using natural language. Its research challenges are: 1) representing the meaning of text, and 2) supporting useful reasoning tasks.

This part covers continuous representations, with emphasis on knowledge base embedding, KB-based question answering, and machine comprehension.

Continuous word representations:

- There are many popular ways to create word vectors

- They encode term co-occurrence information

- They measure semantic similarity well

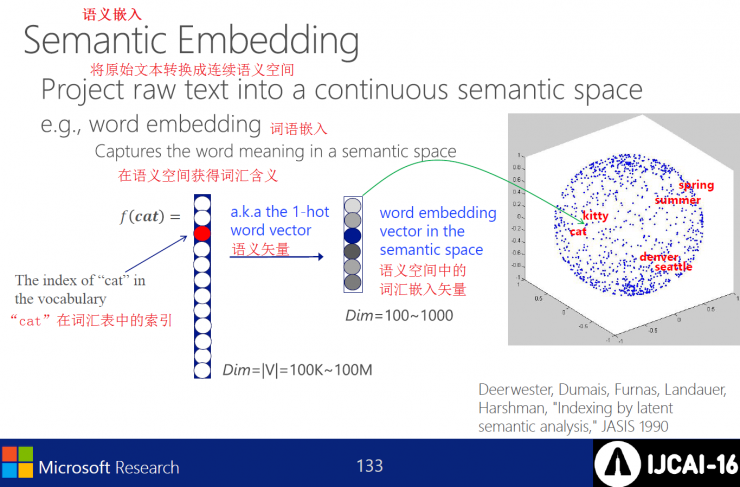
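Semantic similarity between word vectors is commonly measured with cosine similarity. The following is a minimal sketch; the three-dimensional vectors are invented for illustration and do not come from the tutorial.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_u * norm_v)

# Hypothetical word vectors, made up for this example only.
vectors = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}
sim_cat_dog = cosine_similarity(vectors["cat"], vectors["dog"])
sim_cat_car = cosine_similarity(vectors["cat"], vectors["car"])
```

With these toy vectors, "cat" is closer to "dog" than to "car", which is the behavior a good embedding is expected to show.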

Semantic embedding transforms raw text into a continuous semantic space.

The reasons for embedding are:

- Lexical semantic word similarity

- Simple semantic representation of text

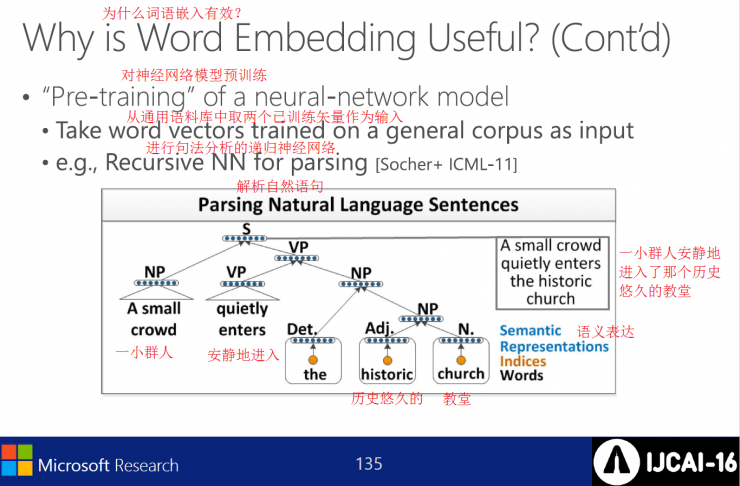

Pre-training neural network models

Word embedding: model examples, evaluation, and related work

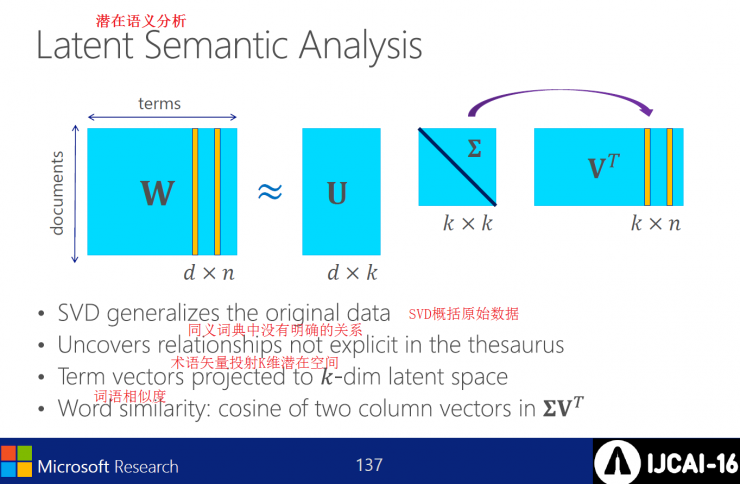

Latent semantic analysis (LSA): SVD generalizes the raw data and uncovers relationships that are not explicit in the thesaurus; term vectors are projected into a k-dimensional latent space, where word similarity can be measured.

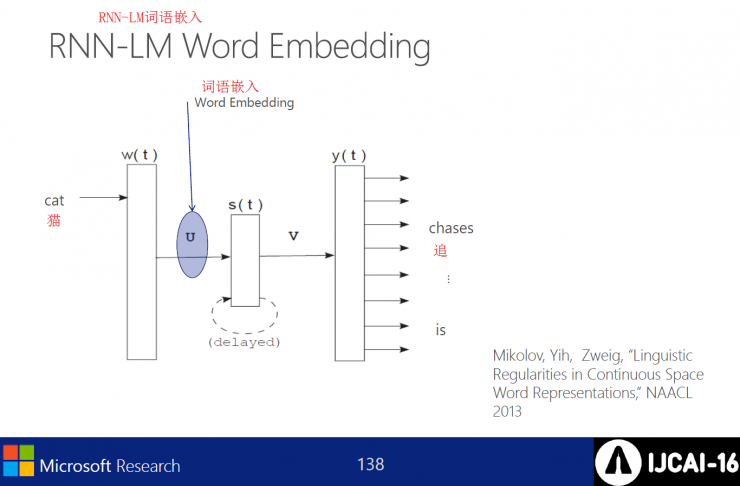

RNN-LM word embedding

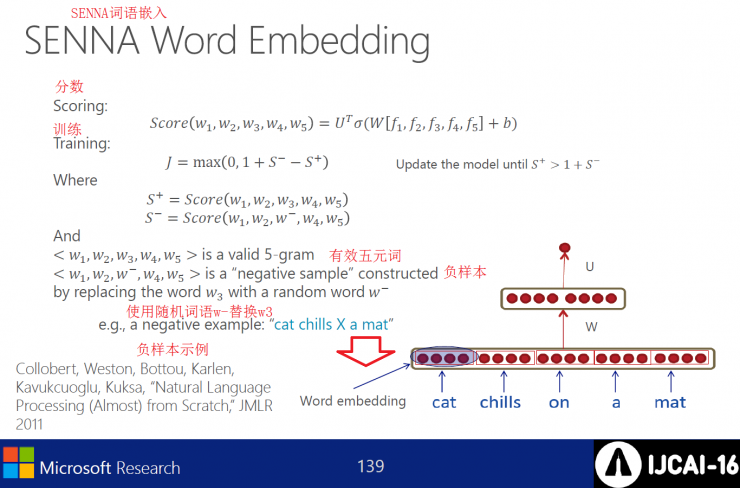

SENNA word embedding

CBOW/Skip-gram word embedding

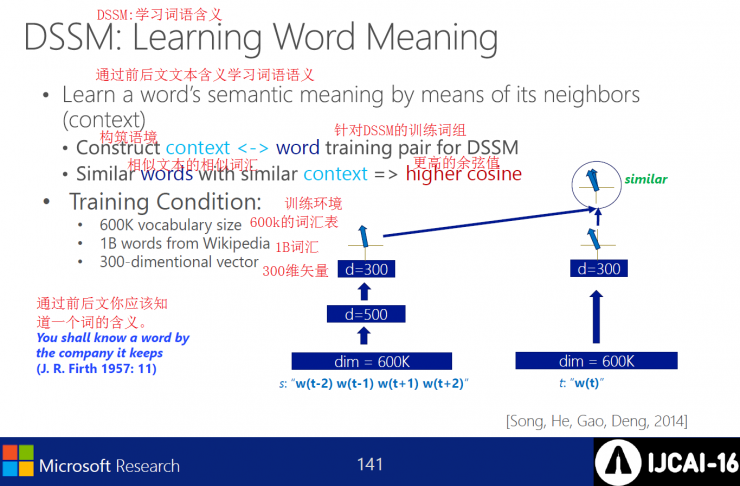

DSSM: Learning the Meaning of Words

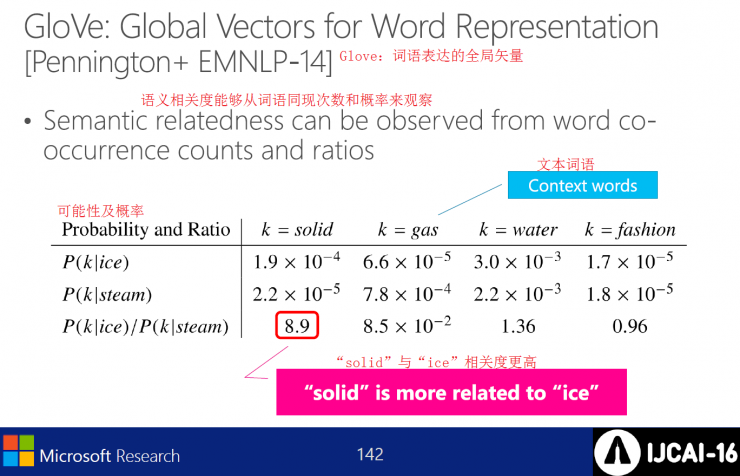

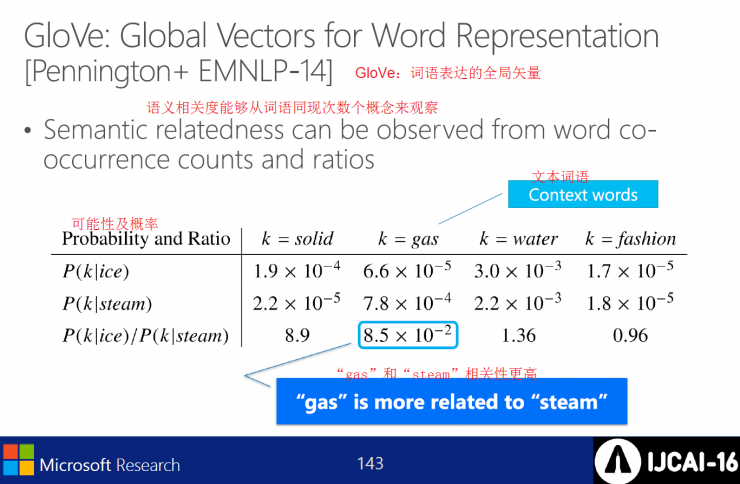

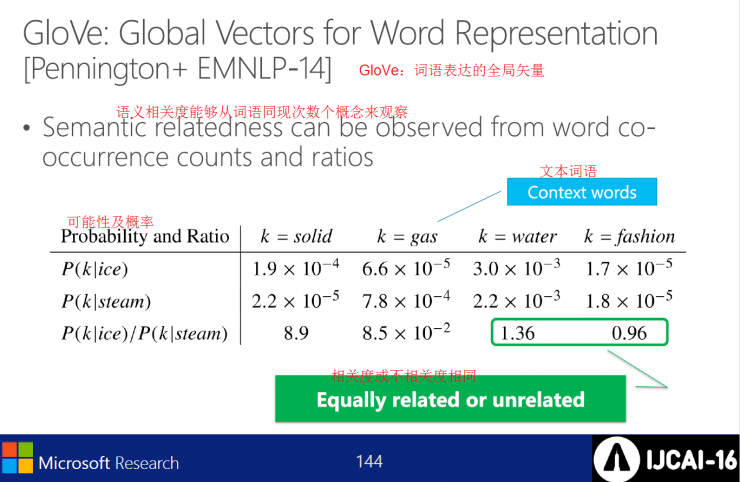

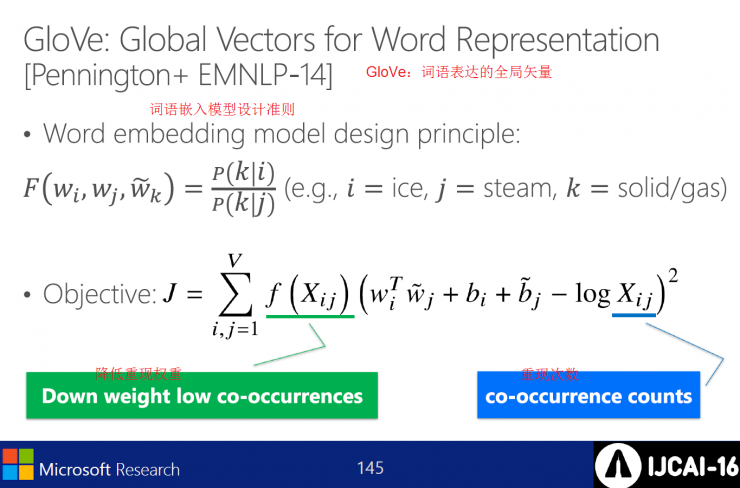

GloVe: Global Vectors for Word Representation

Semantic relatedness can be observed from word co-occurrence counts.

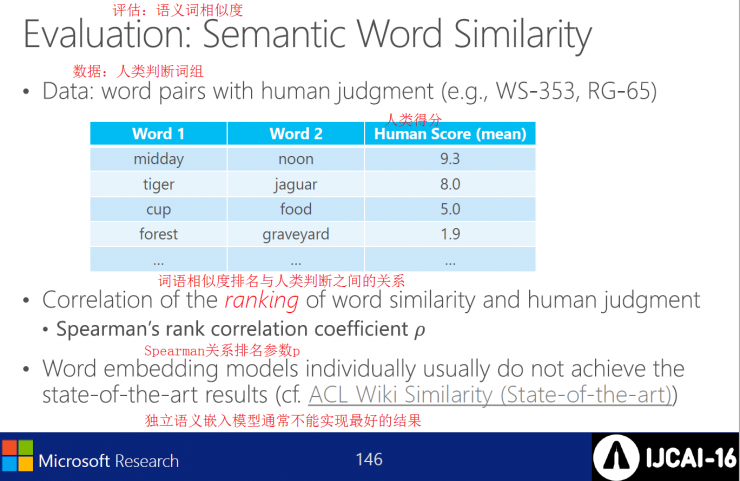
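To make the idea of word co-occurrence counts concrete, here is a small sketch that counts how often two words appear within a fixed window of each other. The corpus, the window size, and the function name are assumptions made for illustration; this is only the counting step, not the GloVe training procedure.

```python
from collections import Counter

def cooccurrence_counts(sentences, window=2):
    """Count ordered word pairs that appear within `window` tokens of each other."""
    counts = Counter()
    for tokens in sentences:
        for i, word in enumerate(tokens):
            start = max(0, i - window)
            end = min(len(tokens), i + window + 1)
            for j in range(start, end):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts

# Toy corpus, invented for this example.
corpus = [
    ["ice", "is", "cold", "and", "solid"],
    ["steam", "is", "hot", "gas"],
    ["ice", "is", "solid", "water"],
]
counts = cooccurrence_counts(corpus, window=2)
```

In this toy corpus "ice" co-occurs with "solid" but "steam" never does, which is the kind of signal that co-occurrence statistics expose.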

Evaluation: Semantic Word Similarity

- Data: word pairs with human similarity judgments

- Metric: the correlation between the model's word similarity ranking and the human judgments

- A single semantic embedding model on its own usually does not achieve the best results

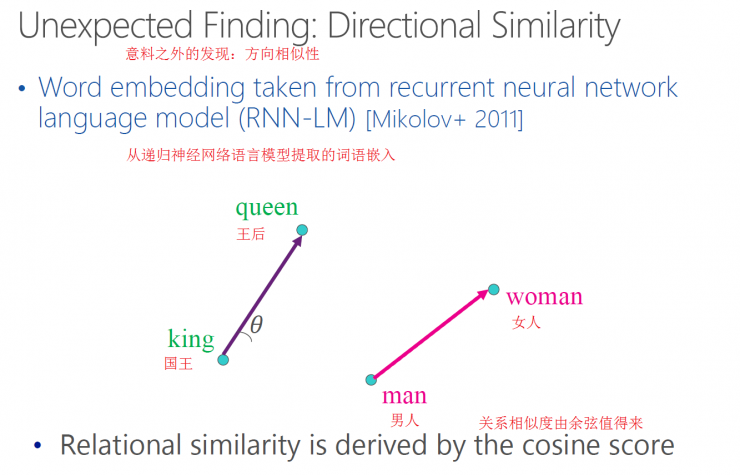
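The correlation between a model's similarity ranking and human judgments is typically a rank correlation. The sketch below computes Spearman's rho with the no-ties formula; the scores are invented, and the choice of Spearman's rho is an assumption, since the text above does not name a specific coefficient.

```python
def ranks(values):
    """Rank positions (1 = smallest). Assumes no tied values, for simplicity."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result

def spearman(xs, ys):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)), valid without ties."""
    n = len(xs)
    rank_x, rank_y = ranks(xs), ranks(ys)
    d_squared = sum((a - b) ** 2 for a, b in zip(rank_x, rank_y))
    return 1.0 - 6.0 * d_squared / (n * (n * n - 1))

# Hypothetical scores for four word pairs: human ratings and model cosine similarities.
human_scores = [9.0, 7.5, 3.0, 1.0]
model_scores = [0.80, 0.85, 0.30, 0.10]
rho = spearman(human_scores, model_scores)
```

The model swaps the order of the top two pairs, so the correlation is high but not perfect.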

Evaluation: Relational Similarity

The task is to judge whether two pairs of words share the same relation, and to ask why this works.

Unexpected finding: word embeddings learned by a recurrent neural network language model capture relational similarity, which can be measured with cosine similarity.

Experimental results

Similar results on other datasets

Word analogy evaluation.

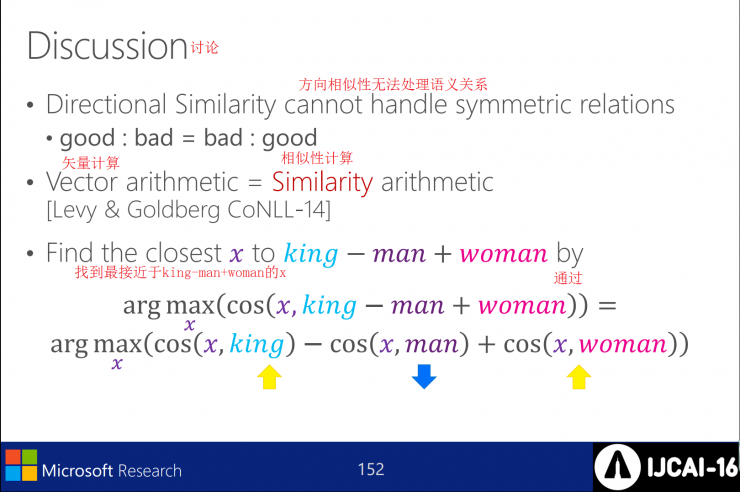

Discussion: 1. directional similarity does not handle semantic relations well; 2. vector arithmetic is equivalent to similarity arithmetic; 3. an analogy is answered by finding the word x whose vector is closest to the computed vector.

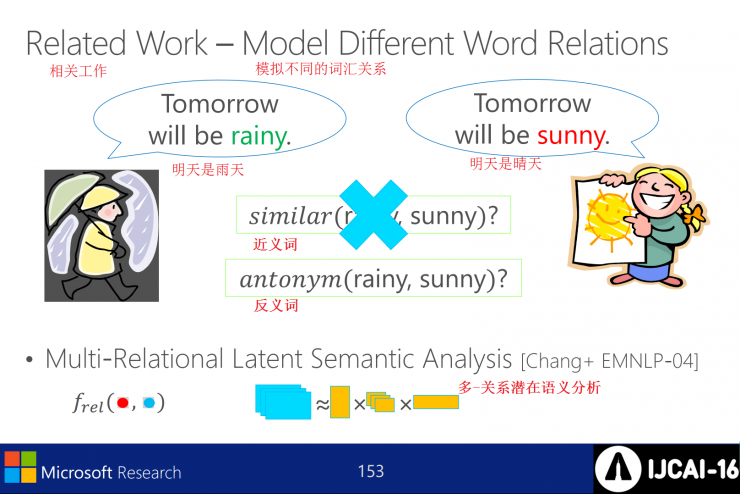
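Finding the closest x by calculation can be sketched as follows: for an analogy "a is to b as c is to x", compute b - a + c and return the vocabulary word with the highest cosine similarity to it. The vectors and the function name `solve_analogy` are hypothetical, chosen so the arithmetic is easy to follow.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def solve_analogy(vectors, a, b, c):
    """Return the word x maximizing cos(x, b - a + c), excluding a, b and c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

# Toy vectors, invented for this example only.
vectors = {
    "man": [1.0, 0.0, 0.2],
    "woman": [1.0, 1.0, 0.2],
    "king": [0.2, 0.0, 1.0],
    "queen": [0.2, 1.0, 1.0],
    "apple": [0.5, 0.1, 0.0],
}
answer = solve_analogy(vectors, "man", "woman", "king")
```

The three query words are excluded from the candidates, a common convention because the nearest vector to the target is otherwise often one of the inputs.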

Related work: modeling different lexical relations, for example judging whether two words are synonyms or antonyms.

Related work: word embedding models, including other word embedding models; analysis of Word2Vec and directional similarity; theoretical justification and unification; and evaluation of vector space representations for NLP.

Neural language understanding.

Knowledge base: captures world knowledge by storing the properties of millions of entities and the relations among them.

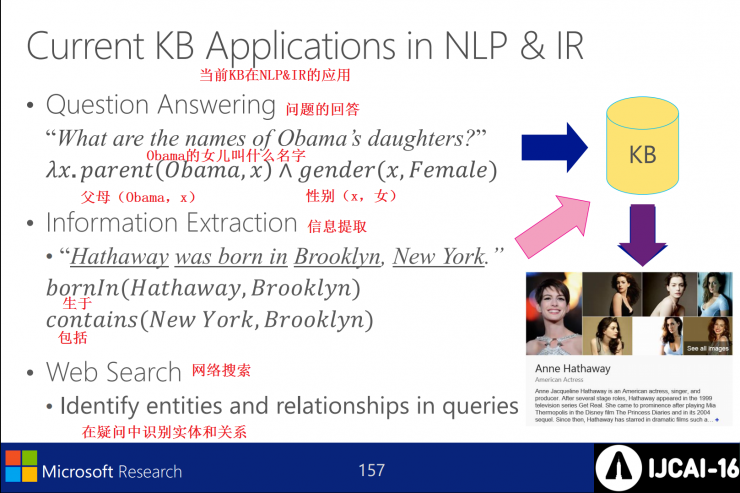

Applications of KBs in NLP and IR: question answering, information extraction, and web search.

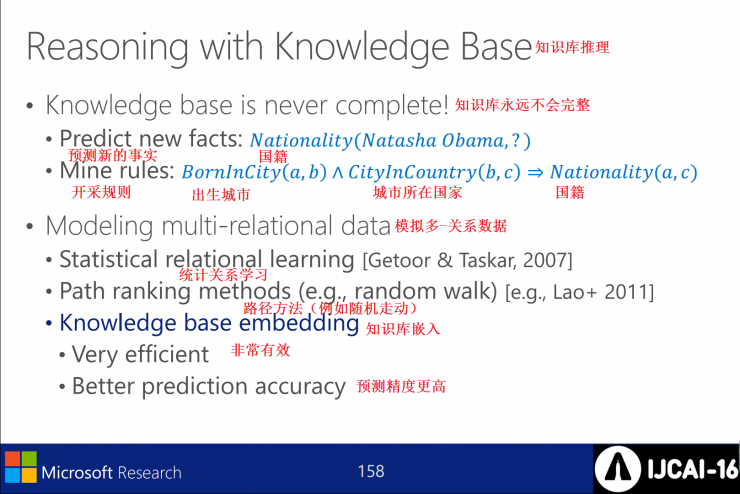

Reasoning with a knowledge base: a knowledge base is never complete, so missing facts have to be inferred by modeling multi-relational data; knowledge base embedding does this more efficiently and accurately.

Knowledge base embedding: each entity in the KB is represented by a vector in R^d, and a scoring function f_r(v_e1, v_e2) predicts whether the triple (e1, r, e2) is correct. Most work on KB embedding falls into two families: tensor decomposition and neural networks.
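One possible form of the scoring function f_r is bilinear: each relation r has a matrix M_r, and the score is v_e1^T M_r v_e2. The sketch below assumes that form; the entity vectors, the relation matrix, and the name `bilinear_score` are made up for illustration and are not taken from the tutorial.

```python
def bilinear_score(v_e1, relation_matrix, v_e2):
    """Compute v_e1^T * M_r * v_e2 using plain Python lists."""
    projected = [sum(m * x for m, x in zip(row, v_e2)) for row in relation_matrix]
    return sum(a * b for a, b in zip(v_e1, projected))

# Hypothetical 2-dimensional entity vectors and one relation matrix.
entity_vectors = {
    "paris": [1.0, 0.0],
    "france": [0.0, 1.0],
}
capital_of = [
    [0.0, 1.0],
    [0.0, 0.0],
]

score_true = bilinear_score(entity_vectors["paris"], capital_of, entity_vectors["france"])
score_false = bilinear_score(entity_vectors["france"], capital_of, entity_vectors["paris"])
```

Because the relation matrix is not symmetric, the score depends on argument order, so (paris, capital_of, france) can score high while the reversed triple scores low.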

Tensor decomposition, knowledge base representation (1/2): a collection of subject-predicate-object triples (e1, r, e2)

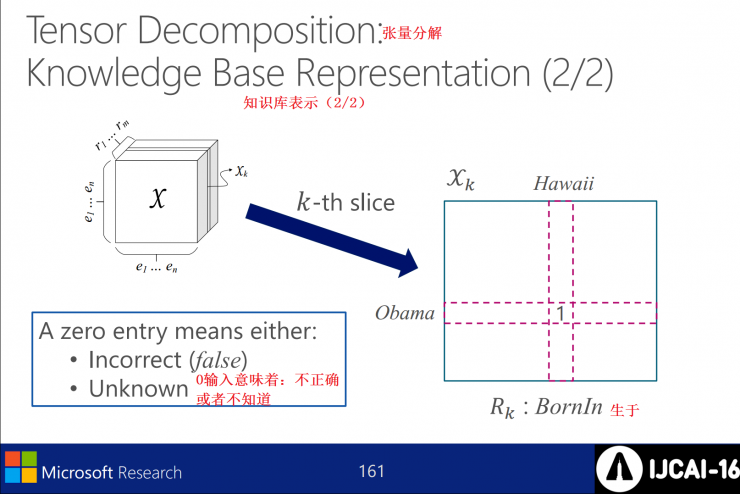

Tensor decomposition, knowledge base representation (2/2): a 0 entry means the triple is incorrect or unknown

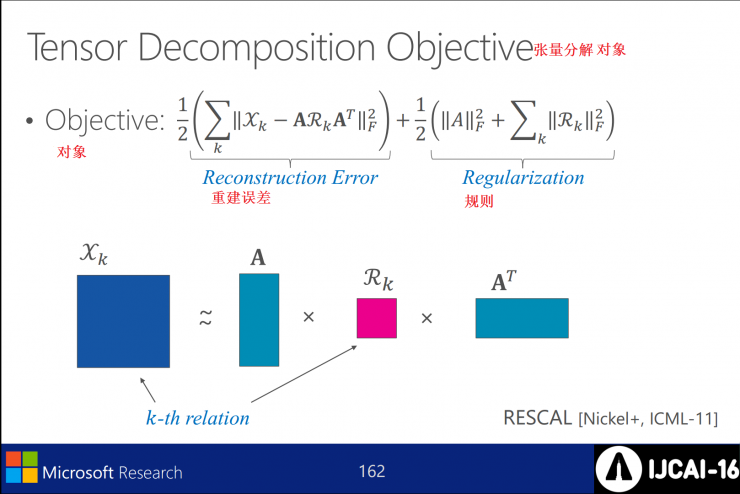
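The tensor representation can be sketched as a three-way binary array indexed by relation, subject, and object. The index order, entity names, and relation names below are assumptions made for this example.

```python
def build_kb_tensor(triples, entities, relations):
    """Return tensor[k][i][j] = 1 if (entities[i], relations[k], entities[j]) is in the KB."""
    entity_index = {e: i for i, e in enumerate(entities)}
    relation_index = {r: k for k, r in enumerate(relations)}
    n = len(entities)
    tensor = [[[0] * n for _ in range(n)] for _ in relations]
    for e1, r, e2 in triples:
        tensor[relation_index[r]][entity_index[e1]][entity_index[e2]] = 1
    return tensor

# Small illustrative knowledge base.
entities = ["Obama", "Hawaii", "USA"]
relations = ["born_in", "part_of"]
triples = [("Obama", "born_in", "Hawaii"), ("Hawaii", "part_of", "USA")]
tensor = build_kb_tensor(triples, entities, relations)
```

Here `tensor[0][0][2]` is 0 even though Obama was born in the USA: the entry is 0 because the fact is missing from the KB, not because it is false, which is why a 0 has to be read as "incorrect or unknown".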

Tensor decomposition objective

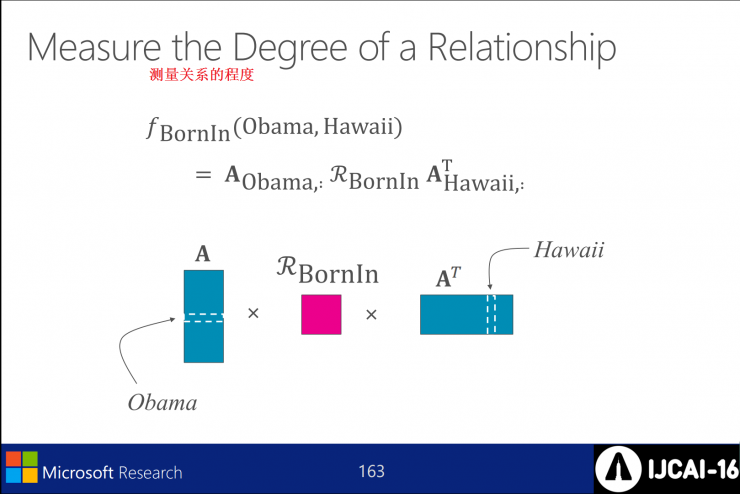
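A common way to write a tensor decomposition objective for a KB is as a reconstruction error: each relation slice X_k is approximated by A R_k A^T, where A holds one row per entity and R_k is a small matrix per relation. The sketch below assumes that factorization form and omits any regularization term; the tutorial's exact objective is in the figure and may differ.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def reconstruction_error(slices, A, R):
    """Sum over relations k of the squared Frobenius norm of X_k - A R_k A^T."""
    A_t = transpose(A)
    total = 0.0
    for X_k, R_k in zip(slices, R):
        approx = matmul(matmul(A, R_k), A_t)
        total += sum((x - y) ** 2
                     for row_x, row_y in zip(X_k, approx)
                     for x, y in zip(row_x, row_y))
    return total

# Toy data: two entities, one relation, identity entity factors.
X = [[[0, 1], [0, 0]]]
A = [[1.0, 0.0], [0.0, 1.0]]
perfect = reconstruction_error(X, A, [[[0.0, 1.0], [0.0, 0.0]]])
empty = reconstruction_error(X, A, [[[0.0, 0.0], [0.0, 0.0]]])
```

With identity entity factors, setting R_k equal to the slice reproduces it exactly, while an all-zero R_k misses the single known triple.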

Measuring the degree of a relationship

Typed tensor decomposition: the main domain knowledge about relations is type information, and only entities of a legitimate type are included in the constraints and the loss. Using type information has three benefits: shorter model training time, high scalability to large KBs, and higher prediction accuracy.

Typed tensor decomposition objective: reconstruction error

Joint tensor decomposition objective: reconstruction error

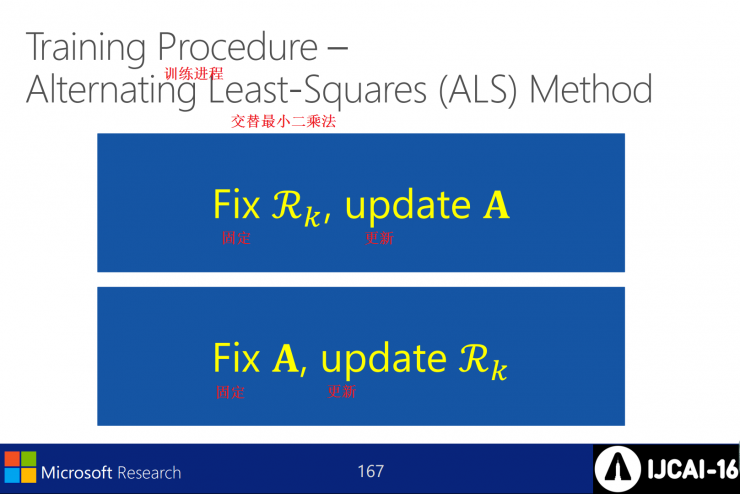

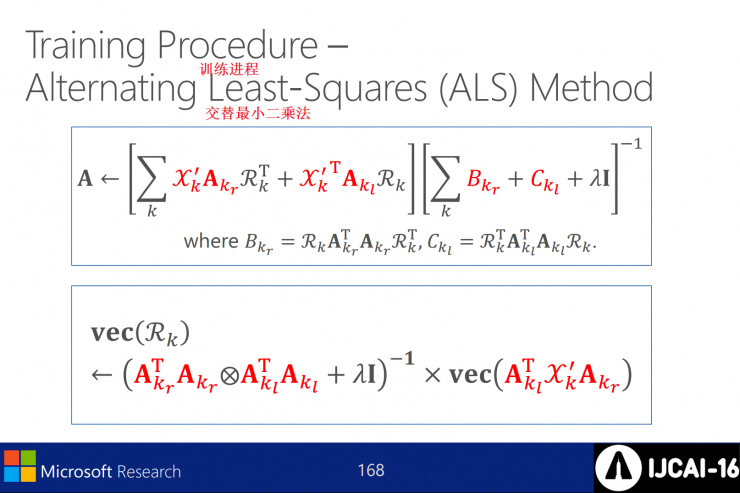

Training procedure: alternating least squares

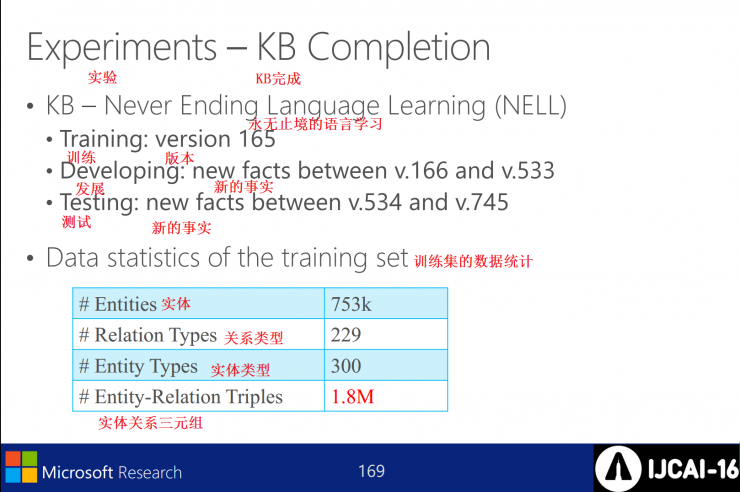
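Alternating least squares fixes all factors but one, solves the resulting least-squares problem in closed form, and then switches to the next factor. The sketch below shows the idea on a deliberately simplified problem, a rank-1 factorization of a matrix rather than the tensor factorization used for KBs; the function name and data are made up for illustration.

```python
def als_rank1(matrix, iterations=20):
    """Approximate `matrix` by the outer product x * y^T using alternating least squares."""
    rows, cols = len(matrix), len(matrix[0])
    x = [1.0] * rows
    y = [1.0] * cols
    for _ in range(iterations):
        # Fix y and solve for x: x_i = (row_i . y) / (y . y)
        yy = sum(v * v for v in y)
        x = [sum(matrix[i][j] * y[j] for j in range(cols)) / yy for i in range(rows)]
        # Fix x and solve for y: y_j = (column_j . x) / (x . x)
        xx = sum(v * v for v in x)
        y = [sum(matrix[i][j] * x[i] for i in range(rows)) / xx for j in range(cols)]
    return x, y

# A rank-1 matrix, so an exact factorization exists.
M = [[2.0, 4.0], [1.0, 2.0]]
x, y = als_rank1(M)
reconstructed = [[xi * yj for yj in y] for xi in x]
```

Each half-step has a closed-form solution, which is what makes the alternating scheme attractive. The sketch does not guard against an all-zero matrix, which would cause a division by zero.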

Experiment: KB completion

Entity retrieval

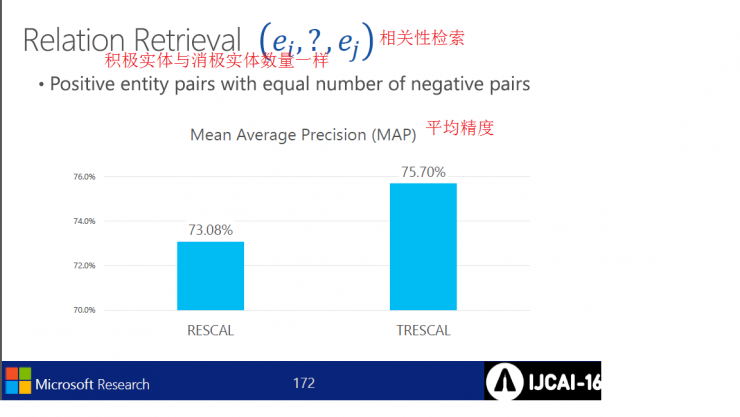

Relation retrieval and its mean average precision
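Retrieval tasks like these are usually scored with mean average precision (MAP), assuming that is the metric meant here. A minimal sketch with invented rankings:

```python
def average_precision(ranked, relevant):
    """Average of precision@k over the positions k where a relevant item appears."""
    hits = 0
    total = 0.0
    for position, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / position
    return total / len(relevant) if relevant else 0.0

def mean_average_precision(queries):
    """Mean of average precision over (ranked_list, relevant_set) pairs."""
    return sum(average_precision(r, rel) for r, rel in queries) / len(queries)

# Hypothetical retrieval results for two queries.
queries = [
    (["a", "b", "c", "d"], {"a", "c"}),
    (["x", "y"], {"y"}),
]
map_score = mean_average_precision(queries)
```

The first query scores (1/1 + 2/3) / 2 and the second scores 1/2, so correct answers that are ranked lower pull the average down.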

Knowledge base embedding models

Scoring functions and their parameters for relation operators

An empirical comparison of neural-network-based KB embedding methods found that: models with fewer parameters perform better; the bilinear operator is crucial; multiplicative interaction is preferable to additive interaction; and pre-trained phrase and embedding vectors are critical for performance.

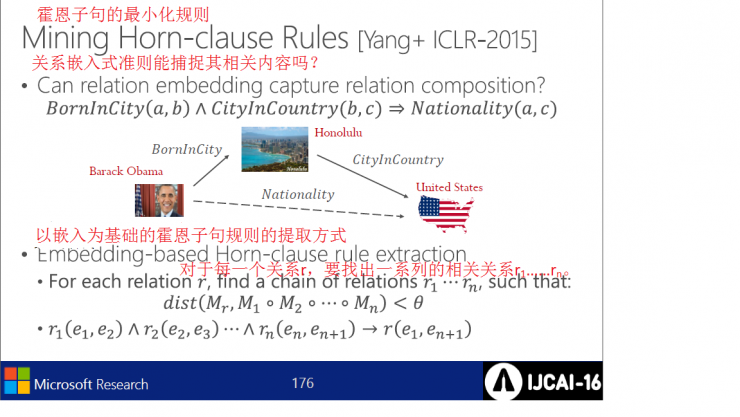
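The contrast between multiplicative and additive modeling can be illustrated with two simple scoring functions that each use only d parameters per relation. Both are sketches under stated assumptions: a bilinear form restricted to a diagonal relation matrix, and a translation-style additive score. The function names and vectors are hypothetical and not taken from the tutorial.

```python
def multiplicative_score(v_e1, relation, v_e2):
    """Bilinear score with a diagonal relation matrix: sum_i e1_i * r_i * e2_i."""
    return sum(a * w * b for a, w, b in zip(v_e1, relation, v_e2))

def additive_score(v_e1, relation, v_e2):
    """Translation-style score: negative squared distance between e1 + r and e2."""
    return -sum((a + w - b) ** 2 for a, w, b in zip(v_e1, relation, v_e2))

# Toy vectors, invented for this example.
e1 = [1.0, 2.0]
e2 = [2.0, 1.0]
r_mult = [1.0, 0.5]
r_add = [1.0, -1.0]

score_mult = multiplicative_score(e1, r_mult, e2)  # 1*1*2 + 2*0.5*1 = 3.0
score_add = additive_score(e1, r_add, e2)          # e1 + r equals e2, so the score is 0
```

Note that the diagonal multiplicative score is symmetric in its two entity arguments, whereas the additive score is not; that is a trade-off of the simplified form used in this sketch.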

Mining Horn clause rules

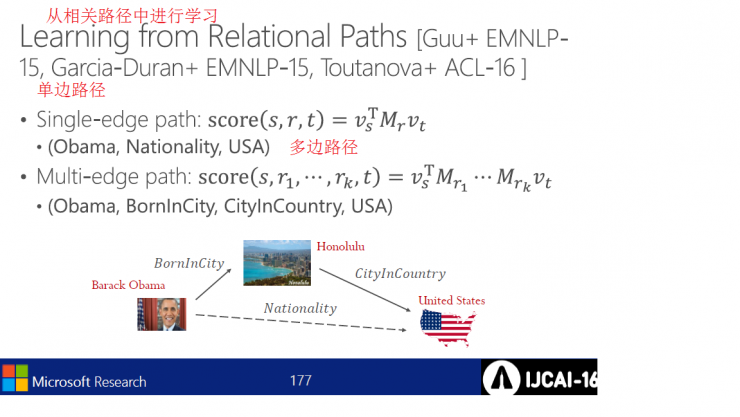

Learning over relation paths

Natural language understanding

Continuous word representations and lexical semantics

Knowledge base embedding

KB-based question answering and machine comprehension

Semantic parsing

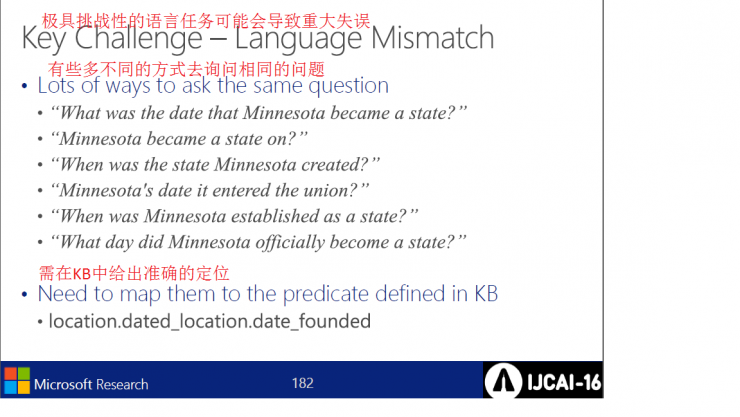

Extremely challenging language tasks can lead to major errors


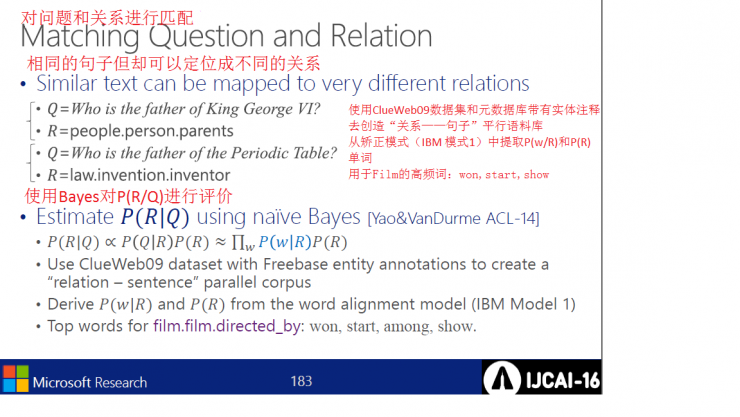

There are three approaches to question matching: semantic parsing via paraphrasing; building phrase-matching features from a lexicon derived from word alignment results; and representing questions as vectors.

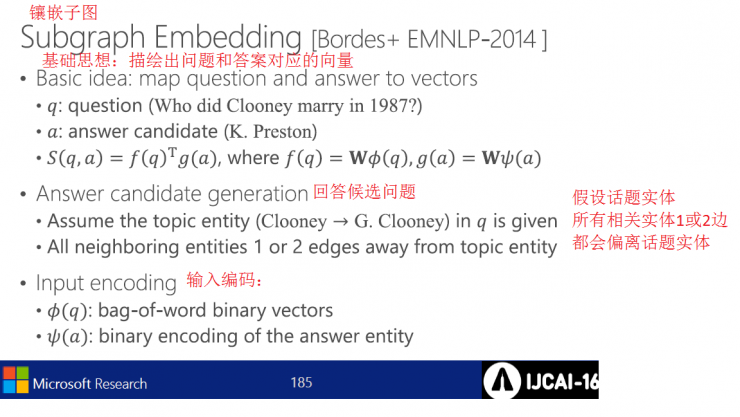

Subgraph embedding model

Using DSSM to identify the inference chain

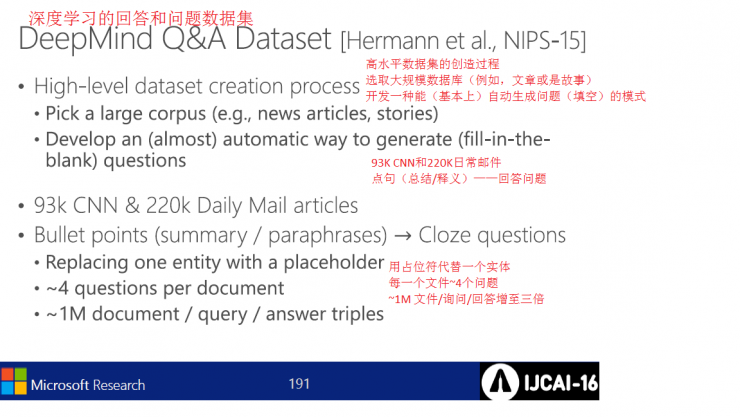

Question answering datasets for deep learning.

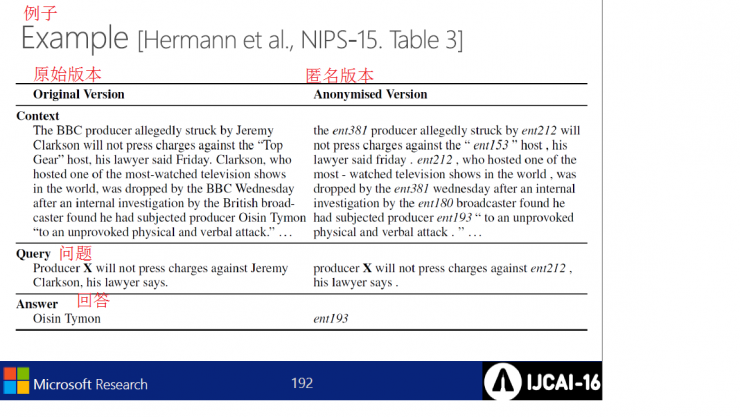

Comparing the original version with the anonymized version.

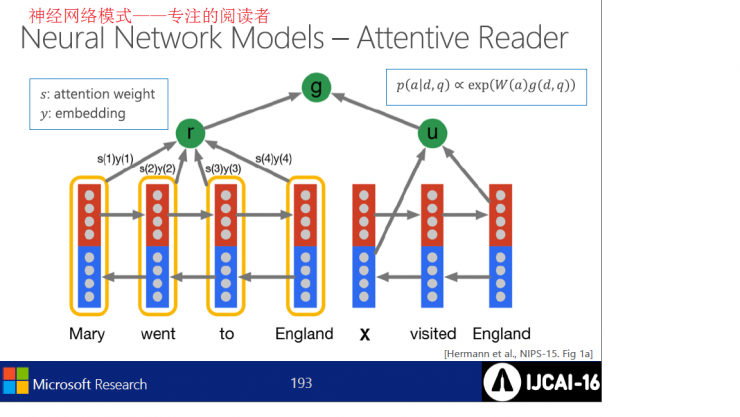
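Assuming the anonymized version replaces entity names with abstract markers, the transformation can be sketched as below. The marker format `@entityN`, the function name, and the example sentence are assumptions made for illustration, and entity detection is taken as given.

```python
def anonymize(tokens, entity_names):
    """Replace each entity with a marker numbered by order of first appearance."""
    mapping = {}
    output = []
    for token in tokens:
        if token in entity_names:
            if token not in mapping:
                mapping[token] = "@entity{}".format(len(mapping) + 1)
            output.append(mapping[token])
        else:
            output.append(token)
    return output, mapping

# Hypothetical sentence and entity set.
tokens = ["Alice", "met", "Bob", "before", "Alice", "left"]
anonymized, mapping = anonymize(tokens, {"Alice", "Bob"})
```

Replacing names with markers prevents a model from answering from background knowledge about the entities, so it has to rely on the text itself.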

Structure of the Attentive Reader neural network model.

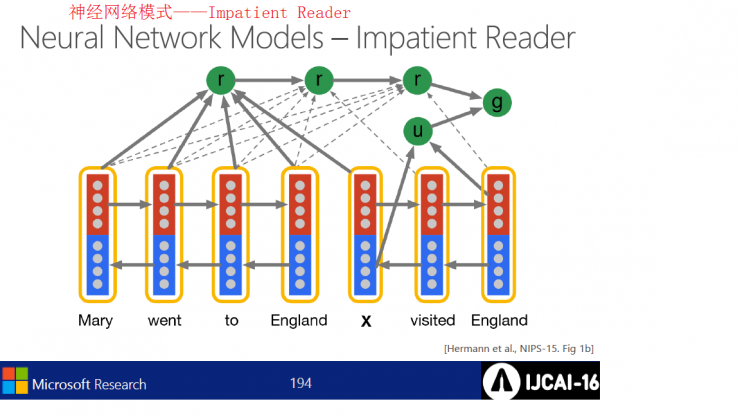
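The general idea of attention in a reader model is to weight each document token by its relevance to the query and then combine the token vectors using those weights. The sketch below uses a simplified dot-product score followed by a softmax; it is not the model's actual scoring network, and the vectors are invented for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(token_vectors, query_vector):
    """Return attention weights and the weighted sum of token vectors."""
    scores = [sum(t * q for t, q in zip(token, query_vector)) for token in token_vectors]
    weights = softmax(scores)
    dim = len(token_vectors[0])
    context = [sum(w * token[d] for w, token in zip(weights, token_vectors))
               for d in range(dim)]
    return weights, context

# Hypothetical token and query representations.
token_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query_vector = [2.0, 0.0]
weights, context = attend(token_vectors, query_vector)
```

Tokens whose vectors align with the query receive most of the weight, so the resulting context vector is dominated by the parts of the document relevant to the question.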

Structure of the Impatient Reader neural network model.

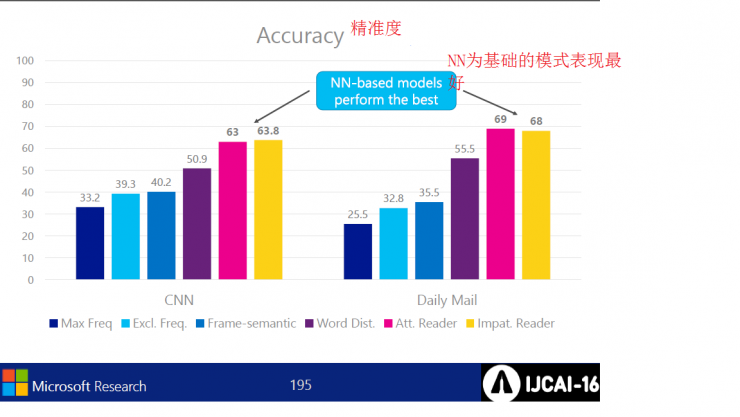

Comparing the accuracy of each model, the NN-based models performed best.

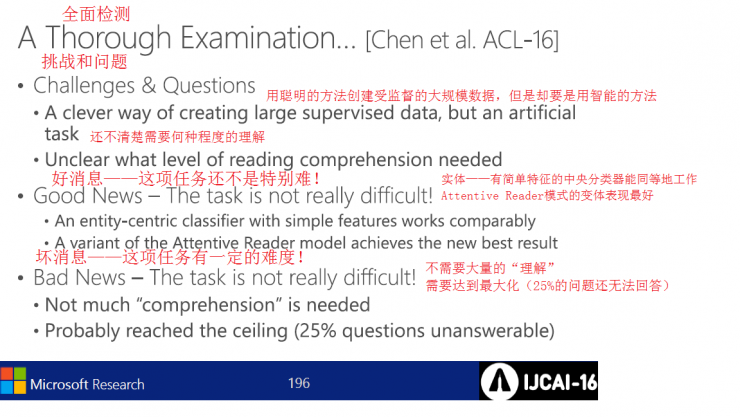

A thorough examination of the task found that it relies on a clever way of creating large-scale supervised data, and that the level of language understanding it actually requires still needs to be understood. The good news is that an entity-based approach works comparably well and that the Attentive Reader model performs best. The bad news is that the task is difficult and there is still room for improvement (25% of the questions still cannot be answered).

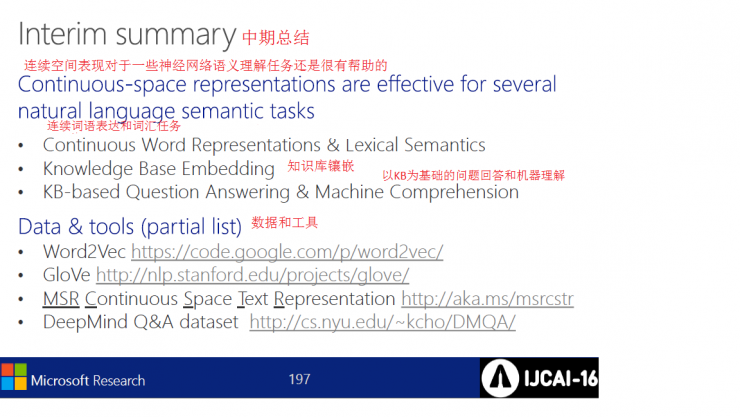

Continuous-space representations are helpful for a number of neural language understanding tasks, for example continuous word representations and lexical tasks, knowledge base embedding, and KB-based question answering and machine comprehension.

Great progress has been made with neural networks and continuous representations, for example in text processing and knowledge inference.

Looking ahead, the tutorial proposes the following directions:

Building a universal intelligence space

Covering text, knowledge, reasoning, and so on.

Moving from component models to end-to-end solutions.

To sum up:

Natural language understanding focuses on building intelligent systems that can interact with humans using natural language. It also relies on continuous word representations and lexical semantics.

Among continuous representations, the emphasis is on knowledge base embedding, KB-based question answering, and machine comprehension.