[Editor's note] This article is adapted from a personal blog post by Tomasz Malisiewicz (Ph.D., CMU; postdoc, MIT; co-founder of vision.ai). Reading it, you will get a better sense of what computer vision is all about, along with an intuitive understanding of how machine learning has evolved over time.

The full text follows:

In this article, we focus on three closely related concepts (deep learning, machine learning, and pattern recognition) and their connections to the hottest technology topics of 2015 (robotics and artificial intelligence).

Figure 1: Artificial intelligence is not about putting a person inside a computer (image from WorkFusion's blog)

Look around and you will see no shortage of high-tech startups looking to hire machine learning experts. Only a small fraction of them need deep learning experts, though. I bet most startups could benefit from even the most basic data analysis. So how do you spot the data scientists of the future? You need to learn how they think.

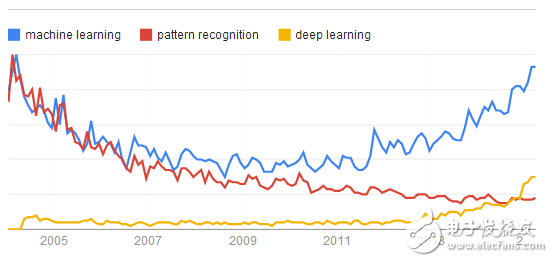

Three highly related "learning" buzzwords

Pattern recognition, machine learning, and deep learning represent three different schools of thought. Pattern recognition is the oldest (as a term, it is quite outdated). Machine learning is the most fundamental (and one of the hottest areas for startups and research labs today). Deep learning is the new, influential frontier; we are nowhere near thinking about a post-deep-learning era. The Google Trends chart below shows that:

1) Machine learning is rising like a true champion;

2) Pattern recognition was originally used as a synonym for machine learning;

3) Pattern recognition is slowly fading away;

4) Deep learning is a new and fast-rising field.

Google search interest in the three terms from 2004 to the present (image from Google Trends)

1. Pattern recognition: the birth of smart programs

Pattern recognition was a very popular term in the 1970s and 1980s. The emphasis was on getting a computer program to do something that looks "smart", such as recognizing the digit "3". And by pouring a great deal of cleverness and intuition into the task, people did build such programs: for example, ones that could tell a "3" from a "B", or a "3" from an "8". Back then, nobody much cared how you did it, as long as there was no person hiding inside the machine (Figure 2). However, if your algorithm applied techniques such as filters, edge detection, and morphological operations to the image, the pattern recognition community was sure to be interested. Optical character recognition (OCR) grew out of this community. It is therefore fair to call pattern recognition the "smart" signal processing of the 1970s, 1980s, and early 1990s. Decision trees, heuristics, and quadratic discriminant analysis all came out of this era. In this era, pattern recognition also became the business of computer scientists rather than electrical engineers. One of the most famous books in the field, Duda & Hart's "Pattern Classification", dates from this era, and it is still a good introductory textbook for beginning researchers. But don't get too hung up on some of its vocabulary: the book has been around for a while, and its terminology is a bit dated.

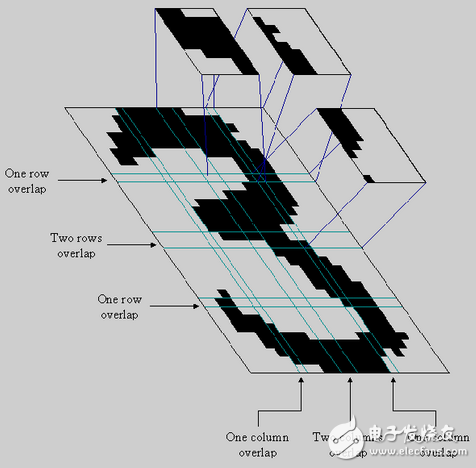

Figure 2: An image of the character "3" divided into 16 sub-blocks.

Hand-crafted rules, hand-crafted decisions, and hand-crafted "smart" programs used to be all the rage for this task (for more information, see this OCR page).
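To make the idea of a hand-crafted "smart" program concrete, here is a minimal Python sketch in the spirit of Figure 2. It splits a tiny binary image into a 4×4 grid (16 sub-blocks), measures the fraction of "ink" in each block, and applies a rule written by a person to tell a "3" from an "8". The 8×8 bitmaps, the helpers `block_densities` and `classify_3_vs_8`, and the 0.5 threshold are all hypothetical illustrations, not the method of any real OCR system.

```python
# A hand-crafted "smart" program in the pattern recognition style.
# Bitmaps, helper names, and threshold are hypothetical, for illustration only.

THREE = [
    ".######.",
    "......##",
    "......##",
    "..#####.",
    "......##",
    "......##",
    "......##",
    ".######.",
]

EIGHT = [
    ".######.",
    "##....##",
    "##....##",
    ".######.",
    "##....##",
    "##....##",
    "##....##",
    ".######.",
]


def block_densities(bitmap, grid=4):
    """Split a square bitmap into grid x grid sub-blocks and return the
    fraction of ink ('#') pixels in each block, in row-major order."""
    step = len(bitmap) // grid  # assumes the side length is divisible by grid
    densities = []
    for bi in range(grid):
        for bj in range(grid):
            ink = sum(
                bitmap[r][c] == "#"
                for r in range(bi * step, (bi + 1) * step)
                for c in range(bj * step, (bj + 1) * step)
            )
            densities.append(ink / (step * step))
    return densities


def classify_3_vs_8(bitmap, grid=4, threshold=0.5):
    """Hand-written rule: an '8' closes its loops on the left side,
    while a '3' leaves its left edge mostly empty."""
    d = block_densities(bitmap, grid)
    left_column = [d[bi * grid] for bi in range(grid)]
    mean_left_ink = sum(left_column) / grid
    return "8" if mean_left_ink >= threshold else "3"


print(classify_3_vs_8(THREE), classify_3_vs_8(EIGHT))  # prints: 3 8
```

Every feature and threshold here was chosen by a person, which is exactly the kind of expert effort that machine learning set out to replace with data.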

Quick quiz: The most famous conference in computer vision is CVPR, and the "PR" stands for pattern recognition. Can you guess the year the first CVPR was held?

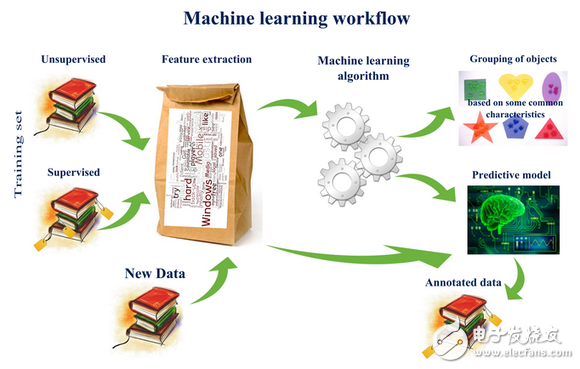

2. Machine learning: smart programs that learn from examples

In the early 1990s, people began to realize that there was a more efficient way to build pattern recognition algorithms: replace experts (people with a great deal of knowledge about images) with data (which can be collected with cheap labor). So you collect a large number of face and non-face images, choose an algorithm, then go grab a coffee and relax in the sun while the computer learns from those images. That is the idea behind machine learning. "Machine learning" emphasizes that once you feed data to a computer program (or machine), it must do something with it: it must learn from the data, and the learning procedure is explicit. Trust me, even if it takes the computer a whole day to learn, that beats inviting your research colleagues over to your place and hand-designing classification rules for the task.

Figure 3: A typical machine learning workflow (image from Dr. Natalia Konstantinova's blog).
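The "learning" step can be as simple as averaging labeled examples. The sketch below is a hedged illustration, not any particular system's method: a nearest-centroid classifier that averages the feature vectors of each class during training, then assigns a new sample to the class whose average is closest. The two-dimensional "face"/"non-face" vectors are made-up toy values standing in for features extracted from real images.

```python
import math
from collections import defaultdict

# Made-up 2-D feature vectors standing in for features of labeled images.
TRAIN = [
    ((0.90, 0.80), "face"),
    ((0.80, 0.90), "face"),
    ((0.85, 0.75), "face"),
    ((0.10, 0.20), "non-face"),
    ((0.20, 0.10), "non-face"),
    ((0.15, 0.30), "non-face"),
]


def fit_nearest_centroid(samples):
    """Learning step: average the feature vectors of each label."""
    sums, counts = {}, defaultdict(int)
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] += 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


centroids = fit_nearest_centroid(TRAIN)
print(predict(centroids, (0.7, 0.7)))  # prints: face
print(predict(centroids, (0.3, 0.2)))  # prints: non-face
```

Nobody wrote a rule here: collecting more labeled examples changes the classifier automatically. Choosing a stronger algorithm or better features is a separate decision.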

By the mid-2000s, machine learning had become an important research topic in computer science. Computer scientists began applying these ideas to a much wider range of problems, no longer limited to recognizing characters, telling cats from dogs, or recognizing particular objects in images. Researchers applied machine learning to robotics (reinforcement learning, manipulation, motion planning, grasping), to the analysis of genetic data, and to financial market prediction. The marriage of machine learning and graph theory also gave rise to a new topic: graphical models. Every robotics expert became, like it or not, a machine learning expert, and machine learning quickly became one of the skills everyone wanted. However, the term "machine learning" says nothing about the underlying algorithms. We have seen convex optimization, kernel methods, support vector machines, and boosting each have their moment in the spotlight. Add hand-engineered features to the mix, and machine learning ends up with a great many methods and many different schools of thought, while a newcomer faces a confusing choice of features and algorithms with no clear guiding principles. Fortunately, this is about to change...

Extended reading: To learn more about features in computer vision, see the original author's earlier blog post "From Feature Descriptors to Deep Learning: 20 Years of Computer Vision."

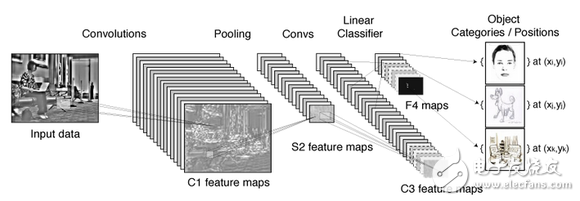

3. Deep learning: one architecture to rule them all

Fast forward to today, and we are seeing a technology that has everyone's attention: deep learning. Among deep learning models, the most popular are Convolutional Neural Networks (CNNs, or ConvNets), used for large-scale image recognition tasks.

Figure 4: ConvNet architecture (image from the Torch tutorial)

Deep learning emphasizes the kind of model you use (for example, deep convolutional multi-layer neural networks), whose parameters are learned from data. However, deep learning also raises other considerations. Because you are dealing with a very high-dimensional model (that is, a huge network), you need a lot of data (big data) and a lot of computing power (graphics processors, GPUs) to optimize it. Convolutions are used heavily in deep learning (especially in computer vision applications), and the architectures are far from shallow.
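To show what a single convolutional layer computes, here is a minimal pure-Python sketch of a 2-D "valid" convolution (no padding, stride 1) followed by a ReLU. Like most deep learning frameworks, it actually computes cross-correlation: the kernel is not flipped. The tiny image and the hand-picked edge kernel are illustrative assumptions; in a ConvNet the kernel values would be learned from data, and a real layer applies many kernels across many channels.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image (no padding, stride 1) and return the map of
    weighted sums. As in most deep learning libraries, this is technically
    cross-correlation: the kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise max(0, x), the usual nonlinearity after a convolution."""
    return [[max(0, x) for x in row] for row in feature_map]


# A dark-to-bright vertical edge and a hand-picked horizontal-gradient kernel.
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
KERNEL = [[-1, 1]]

print(relu(conv2d_valid(IMAGE, KERNEL)))  # prints: [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The kernel here plays the role of the hand-designed edge detectors of the pattern recognition era; the difference in a ConvNet is that these numbers are parameters fitted by backpropagation.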

If you want to learn deep learning, you will need to brush up on some linear algebra, and of course you need to know how to program. I highly recommend Andrej Karpathy's blog post "Hacker's Guide to Neural Networks." As a starting point, you could also pick an application problem that does not require convolutions and implement your own CPU-based backpropagation algorithm.
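Following that advice, here is a minimal sketch of CPU-based backpropagation on a problem with no convolutions: a network with one hidden layer learning XOR, written in plain Python. The network size, sigmoid activations, squared-error loss, learning rate, epoch count, and seed are all illustrative assumptions rather than recommended settings; the point is to show the forward pass, the chain-rule backward pass, and the gradient-descent update in one place.

```python
import math
import random

# A tiny 2-layer network trained with hand-written backpropagation on the CPU.
# Network size, learning rate, epochs, and the XOR task are illustrative choices.


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Return hidden activations h and output y for one input x."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hi for w, hi in zip(p["W2"], h)) + p["b2"])
    return h, y


def loss_and_grads(p, x, t):
    """Squared error 0.5 * (y - t)**2 for one sample, plus its gradients."""
    h, y = forward(p, x)
    loss = 0.5 * (y - t) ** 2
    # Output unit: dL/dz2 = (y - t) * sigmoid'(z2), where sigmoid'(z2) = y * (1 - y).
    dz2 = (y - t) * y * (1 - y)
    # Hidden units: chain rule back through W2 and each hidden sigmoid.
    dz1 = [dz2 * w2 * hi * (1 - hi) for w2, hi in zip(p["W2"], h)]
    grads = {
        "W1": [[d * xi for xi in x] for d in dz1],
        "b1": dz1,
        "W2": [dz2 * hi for hi in h],
        "b2": dz2,
    }
    return loss, grads


def train(data, n_hidden=4, lr=0.5, epochs=5000, seed=0):
    """Plain per-sample gradient descent using the gradients above."""
    p = init_params(len(data[0][0]), n_hidden, seed)
    for _ in range(epochs):
        for x, t in data:
            _, g = loss_and_grads(p, x, t)
            p["b2"] -= lr * g["b2"]
            for j in range(n_hidden):
                p["W2"][j] -= lr * g["W2"][j]
                p["b1"][j] -= lr * g["b1"][j]
                for i in range(len(x)):
                    p["W1"][j][i] -= lr * g["W1"][j][i]
    return p


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
model = train(XOR)
for x, t in XOR:
    print(x, t, round(forward(model, x)[1], 3))
```

With these settings the network typically learns XOR (outputs close to 0 and 1), although a different seed or learning rate may be needed if training stalls on a plateau. Comparing the hand-derived gradients against finite differences is a cheap way to catch backpropagation bugs before training anything large.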

Deep learning still has many open problems. There is no complete theory of why deep learning works so well, and no guide or book that can substitute for hands-on machine learning experience. Deep learning is also not a panacea: there are good reasons for its popularity, but it will never take over the whole world. Still, as long as you keep sharpening your machine learning skills, you will never go hungry. But don't be too in awe of deep learning frameworks; don't be afraid to tailor and tweak them to build software that fits your learning algorithms. Future Linux kernels may well run on Caffe, a very popular deep learning framework. Even so, great products always require great vision, domain expertise, market development, and, most importantly, human creativity.

Other related terms

1) Big data: Big data is a broad concept covering, for example, the storage of huge amounts of data and the mining of information hidden in it. For business operations, big data can often help inform decisions. Machine learning algorithms have been combined with big data for years. Researchers, and indeed any everyday developer, now have access to services such as cloud computing, GPUs, DevOps, and PaaS.

2) Artificial intelligence: Artificial intelligence is probably the oldest term of all, and the vaguest. It has gone through several booms and busts over the past 50 years. When you meet someone who says they work on artificial intelligence, you have two options: either roll your eyes, or take out a sheet of paper and write down everything they say.

Extended reading: The original author's 2011 blog post "Computer vision is artificial intelligence."

Conclusion

That is where this discussion of machine learning ends (don't think of it as just one of deep learning, machine learning, or pattern recognition; the three terms simply emphasize different things). But research goes on, and exploration continues. We will keep building smarter software, and our algorithms will keep learning, but we have only just begun to explore the kind of architecture that can truly rule them all.

If you are also interested in real-time vision applications of deep learning, especially those suited to robotics and home automation, please visit our website, vision.ai. I hope to be able to say a bit more about this in the future...

About the author: Tomasz Malisiewicz holds a Ph.D. from CMU, was a postdoctoral fellow at MIT, and is a co-founder of vision.ai. He focuses on computer vision and has done a great deal of work in the field. His blog is also rich in useful information; if you are interested, browse his personal homepage and blog.
